br1dging the Gap I: Working with tap devices in k8s

This is the first of several blog posts in the “br1dging the Gap” series! The series of posts will talk about the various gaps between what containers and their orchestration systems expect and what nabla containers expect.

This was a prominent theme in the writing of runnc, and we hope to be able to share a little about our design choices in runnc.

This set of posts relates to Nabla containers as well as other technologies that use a VM-like interface, such as VMs (e.g. Kata Containers) and unikernels in general.

We note that the content of the blog talks about the internal workings of runnc.

In this blog post, we talk about bridging the network interfaces (pun intended - as will be very apparent later in the implementation). More specifically, we will talk about the network interfaces provided by container orchestration systems (usually a veth pair), and how we bridged them to what nabla containers consume (a tap device).

Our Kubernetes setup and its challenges

Nabla containers are based on unikernels and use the solo5 interface. Solo5 consumes a block device for storage and a tap device for networking, since the higher-level filesystem and transport-layer protocols are already implemented by the LibOS (which is what gives us isolation)! Therefore, the usual way of setting up a nabla process is to create a tap device and configure the network with either a static IP or DHCP.

However, networking in kubernetes is done through a network plugin. Most commonly, a Container Network Interface (CNI) plugin is used to manage the virtualized network setup. The CNI plugins used with most kubernetes clusters provide a veth pair. Therefore, we need to be able to connect the tap device of the unikernel to the interface set up by the CNI plugin.

It is important to note that there are CNI plugins that provide tap devices. However, we still require system containers to run as regular containers, as they may need to run as privileged host components. It is possible to set it up such that the different types of containers get their own set of interfaces (i.e. regular containers get a veth pair and nabla containers get a tap).

However, as of this moment, this requires significant changes to the underlying runtime components (e.g. replacing containerd).

One way to do this is to use another CRI runtime. An example that does something like this is frakti.

Another way is to have an existing CRI runtime such as containerd recognize CNI plugin selectors from pods, similar to how the OCI runtime is selected via RuntimeClass.
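
For reference, this is roughly what OCI runtime selection via RuntimeClass looks like today; a per-pod CNI selector would be an analogous mechanism, which to our knowledge does not exist yet. This is only a sketch: the handler name `runnc`, the class name `nabla`, the pod name, and the image are assumptions made for illustration, and the `node.k8s.io/v1` API version assumes a current Kubernetes release.

```shell
#!/bin/sh
# Write a RuntimeClass and a pod that selects it.
# ASSUMPTION: the handler name "runnc" must match whatever name the CRI
# runtime (e.g. containerd) was configured with for the nabla OCI runtime.
cat > nabla-runtimeclass.yaml <<'EOF'
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: nabla
handler: runnc
---
apiVersion: v1
kind: Pod
metadata:
  name: nabla-example
spec:
  runtimeClassName: nabla
  containers:
  - name: app
    image: example.com/nabla-app:latest  # hypothetical image
EOF

# Apply with: kubectl apply -f nabla-runtimeclass.yaml
```

Pods without a `runtimeClassName` keep using the default runtime, which is why system containers can continue to run as regular containers.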

A note on other OCI consumers

Backing up for a moment from the world of kubernetes, we can’t forget that the OCI runtime interface has more consumers. One of them is the most popular way to run nabla containers: docker! In docker’s case, the default behavior is to provide a veth pair!

Our goal in runnc was to bridge the gap between the provided veth pair and the tap device consumed by the nabla container.

Creating network plumbing in Kubernetes

The basic idea behind the networking setup in kubernetes is that the CNI plugin is responsible for assigning an IP address to the network interface of a kubernetes pod. A pod is a construct in kubernetes that can consist of multiple containers that share a network namespace.

Original k8s pod setup

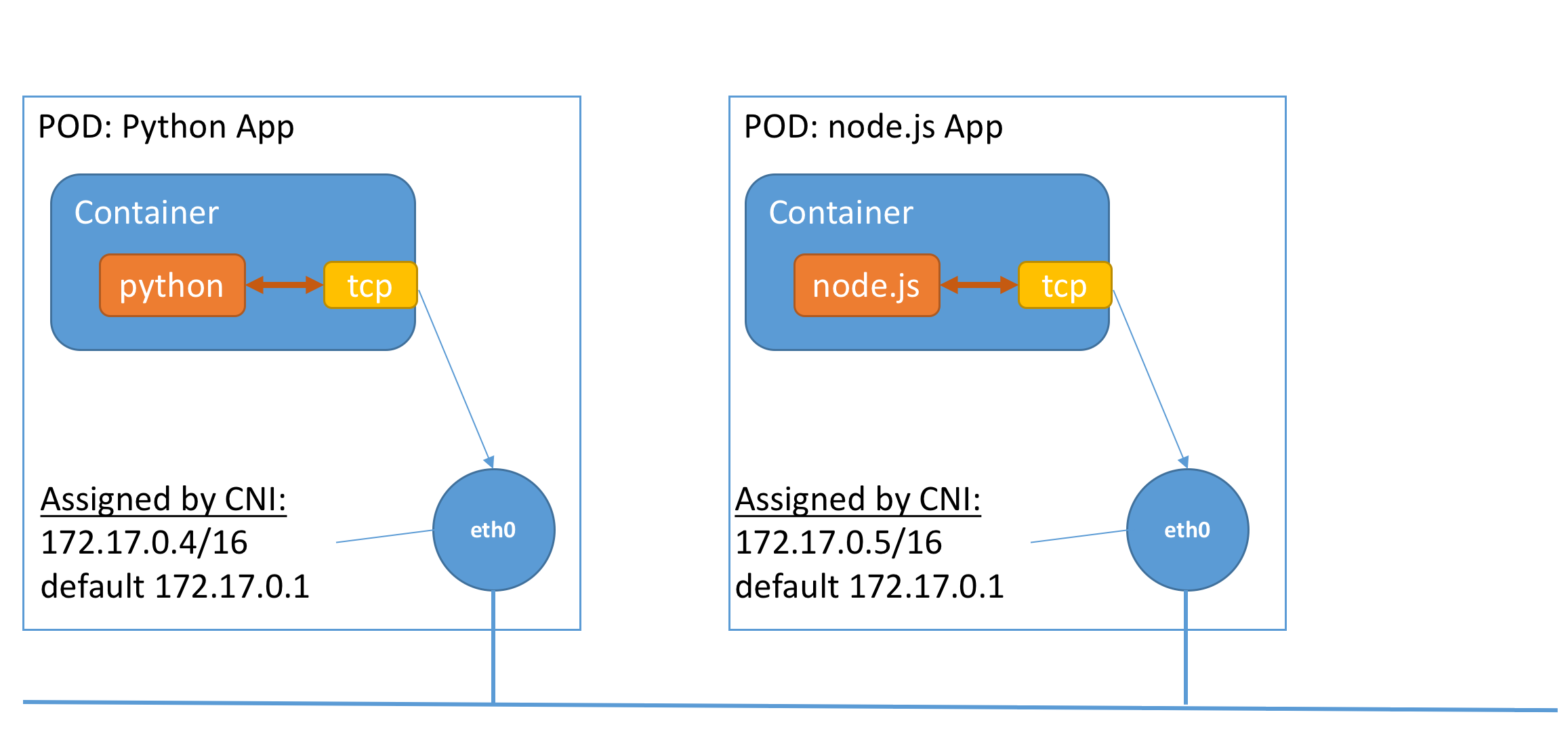

The figure above shows an example of a typical kubernetes network setup with two pods.

We see that the pod interfaces with the rest of the network through an eth0 interface given to it. The CNI plugin is responsible for setting the IP and default gateway for the eth0 interface. There is a component in the background that does the bridging for the devices, but it is not relevant for our purposes.

We note that the typical containerized application (i.e. the python server on the left) talks to the TCP stack via a syscall interface, which uses the network configuration on eth0 to route packets.

Nabla k8s pod setup

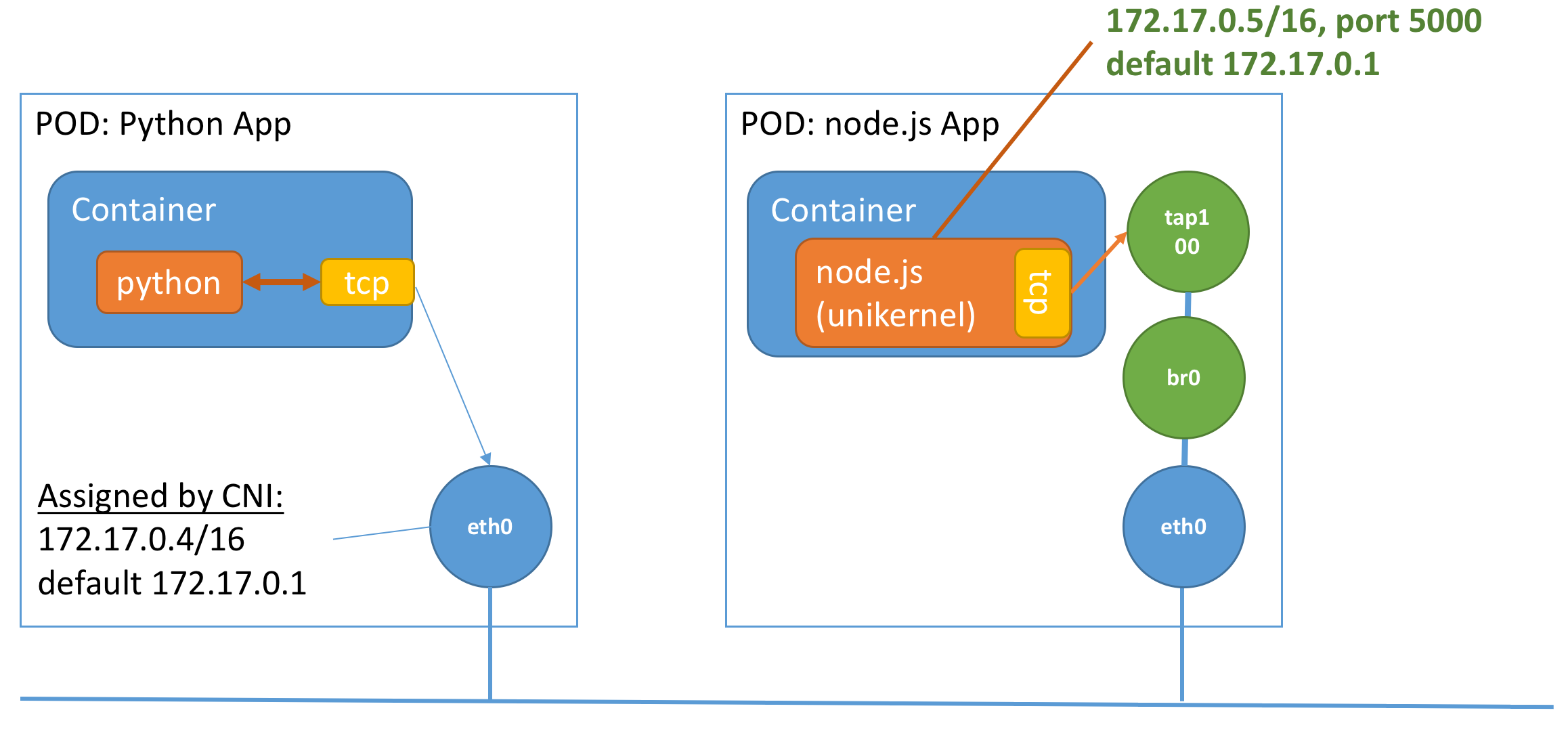

To connect the tap device of the unikernel to the interface provided by the CNI plugin, we have to perform the following steps:

- Obtain the network configuration of `eth0`
- Create a TAP device (requires `NET_ADMIN` capabilities), `tap100`
- Remove the network configuration on `eth0`
- Create a bridge and bridge the `tap100` to `eth0`
- Run the unikernel using `tap100` with the network configuration originally obtained in `eth0`

The above steps can be done using a bridge as described or, alternatively, with a MacVTap (which has a lower isolation profile as well!). The runnc code that does this can be seen here. For those familiar with doing this in Linux, the steps can also be represented by the following set of commands:

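    # Capture the network configuration that the CNI plugin assigned to eth0
    INET_STR=$(ip addr show dev eth0 | grep "inet " | awk '{print $2}')
    echo "$INET_STR"
    CIDR=$(echo "$INET_STR" | awk -F'/' '{print $2}')
    echo "$CIDR"
    IP_ADDR=$(echo "$INET_STR" | awk -F'/' '{print $1}')
    GW=$(ip route | grep ^default | awk '{print $3}')

    # Create tuntap device (skip mknod if the device node already exists)
    mkdir -p /dev/net
    [ -c /dev/net/tun ] || mknod /dev/net/tun c 10 200
    ip tuntap add tap100 mode tap
    ip link set dev tap100 up

    # Remove the IP configuration from eth0; the unikernel will use it instead
    ip addr del "$INET_STR" dev eth0

    # Bridge eth0 and tap100 together
    ip link add name br0 type bridge
    ip link set eth0 master br0
    ip link set tap100 master br0
    ip link set br0 up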
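
The parsing step at the top of the commands above can be exercised without touching a real interface. The following is a minimal sketch, not runnc's code: the helper names `first_inet` and `default_gw` are hypothetical, and the sample output and addresses are made up. Matching on whole fields with awk avoids picking up `inet6` lines, and stopping at the first match guards against interfaces with several addresses.

```shell
#!/bin/sh
# Illustrative helpers; the names are hypothetical and not part of runnc.

# first_inet: read `ip addr show dev <if>` output on stdin and print the
# first IPv4 address in ADDRESS/PREFIX form.
first_inet() {
    awk '$1 == "inet" { print $2; exit }'
}

# default_gw: read `ip route` output on stdin and print the default gateway.
default_gw() {
    awk '$1 == "default" && $2 == "via" { print $3; exit }'
}

# Sample text in the format printed by iproute2 (addresses are made up).
SAMPLE_ADDR='3: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP
    link/ether 0a:58:0a:f4:01:05 brd ff:ff:ff:ff:ff:ff
    inet 10.244.1.5/24 brd 10.244.1.255 scope global eth0
    inet6 fe80::858:aff:fef4:105/64 scope link'
SAMPLE_ROUTE='default via 10.244.1.1 dev eth0
10.244.1.0/24 dev eth0 proto kernel scope link src 10.244.1.5'

INET_STR=$(printf '%s\n' "$SAMPLE_ADDR" | first_inet)
IP_ADDR=${INET_STR%/*}   # text before the slash
CIDR=${INET_STR#*/}      # text after the slash
GW=$(printf '%s\n' "$SAMPLE_ROUTE" | default_gw)

echo "ip=$IP_ADDR cidr=$CIDR gw=$GW"
# prints: ip=10.244.1.5 cidr=24 gw=10.244.1.1
```

In a real pod the sample strings would be replaced by `ip addr show dev eth0` and `ip route` piped into the same helpers.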

The resultant configuration is the one shown in the “Nabla k8s pod setup” figure above.

We note that as the unikernel contains its own TCP/IP stack, we need to pass in the necessary network information via its arguments. This is done via string replacement of certain keywords. More specifically, the script supports:

- `<tap>`: The tap device name to use
- `<ip>`: The IP address to use
- `<cidr>`: The mask of the IP
- `<gw>`: The default gateway route to use
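
As an illustration of the keyword replacement, here is a minimal sketch using `sed`. It is not runnc's implementation: the function name `expand_net_args` and the argument template are hypothetical, chosen only to show the four keywords being substituted, and the values are made up.

```shell
#!/bin/sh
# Hypothetical helper (not runnc's code): expand the <tap>, <ip>, <cidr>
# and <gw> keywords in a unikernel argument string.
# Usage: expand_net_args TEMPLATE TAP IP CIDR GW
expand_net_args() {
    printf '%s\n' "$1" | sed \
        -e "s|<tap>|$2|g" \
        -e "s|<ip>|$3|g" \
        -e "s|<cidr>|$4|g" \
        -e "s|<gw>|$5|g"
}

# The template format and the values below are made up for illustration.
expand_net_args '--net=<tap> --ip=<ip>/<cidr> --gw=<gw>' \
    tap100 10.244.1.5 24 10.244.1.1
# prints: --net=tap100 --ip=10.244.1.5/24 --gw=10.244.1.1
```

The sketch uses `|` as the `sed` delimiter so that slashes in values need no escaping; it assumes the values themselves contain no `|`, `&`, or backslash characters.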

This can be seen in the implementation of runnc in specifying the tap here, and the network parameters here.

What’s next?

Unfortunately, this isn’t all there is to networks. There are some complications especially when it comes to working with kubernetes. The next blog will talk about those network complications - related to ARP and Routing! Stay tuned for our next blog post in the br1dging the gap series!